
Serving Online Inference with SGLang on Vast.ai

Background

SGLang is an open-source framework for serving language models, with a focus on throughput for both online serving and batch workloads. This makes it well suited to apps that serve many users and need to scale. Many technology companies use SGLang to serve their language models to the public, most notably xAI with its Grok model.

SGLang provides an OpenAI-compatible server, allowing you to easily integrate it into chatbots and other applications.

As companies develop their AI products, they often run into rate limits and high costs when relying on hosted model APIs. With SGLang on Vast, you can run your own models in the form factor you need, at a much more affordable price point. As inference demand grows with agents and complex workflows, running SGLang on Vast keeps both performance and cost under your control.

This guide will show you how to set up SGLang to serve a language model on Vast. An accompanying notebook that you can use is available here.

Setup and Querying

First, set up your environment and Vast API key:

pip install --upgrade vastai

Once you create your account, go here to find your API key.

vastai set api-key <Your-API-Key-Here>

For serving a language model, we're looking for a machine with a static IP address, available ports to host on, and a single modern GPU with plenty of GPU RAM. CUDA graphs take up a lot of memory and can limit deployment options for certain models. SGLang also requires CUDA version 12.2 or higher, so we'll filter for that as well. We will query the Vast API to get a list of machines that match.

vastai search offers 'compute_cap > 800 gpu_ram > 40 num_gpus = 1 static_ip=true direct_port_count > 1 cuda_vers >= 12.2'
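
If you would rather pick an offer programmatically, the same search can be driven from Python. The sketch below is only one possible approach. It assumes that the vastai CLI prints a JSON list when given --raw and that each offer carries an hourly price under dph_total; check both against your installed CLI version. The helper names search_offers and cheapest_offer are hypothetical.

```python
import json
import subprocess

# Same filter string as the CLI search shown in this guide.
QUERY = (
    "compute_cap > 800 gpu_ram > 40 num_gpus = 1 static_ip=true "
    "direct_port_count > 1 cuda_vers >= 12.2"
)


def search_offers(query=QUERY):
    """Run the vastai CLI and return the parsed list of offers.

    Assumption: `--raw` makes the CLI print a JSON list of offer objects.
    """
    result = subprocess.run(
        ["vastai", "search", "offers", query, "--raw"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


def cheapest_offer(offers, price_key="dph_total"):
    """Return the offer with the lowest price, or None if none has a price.

    Assumption: `price_key` holds the total price per hour in dollars.
    """
    priced = [o for o in offers if isinstance(o.get(price_key), (int, float))]
    if not priced:
        return None
    return min(priced, key=lambda o: o[price_key])
```

Calling cheapest_offer(search_offers()) returns a single offer dictionary, and its id field is the value to use as the instance ID when creating the instance.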

Deploying the Image

The easiest way to deploy this instance is to use the command line. Copy the ID of the instance you chose from the list above into <instance-id> below. We'll also need to put a Hugging Face API token in <Huggingface-Token>.

vastai create instance <instance-id> --image lmsysorg/sglang:latest --env '-p 8000:8000 -e HF_TOKEN=<Huggingface-Token>' --disk 60 --args python3 -m sglang.launch_server --model-path meta-llama/Meta-Llama-3.1-8B-Instruct --host 0.0.0.0 --port 8000

Connecting and Testing

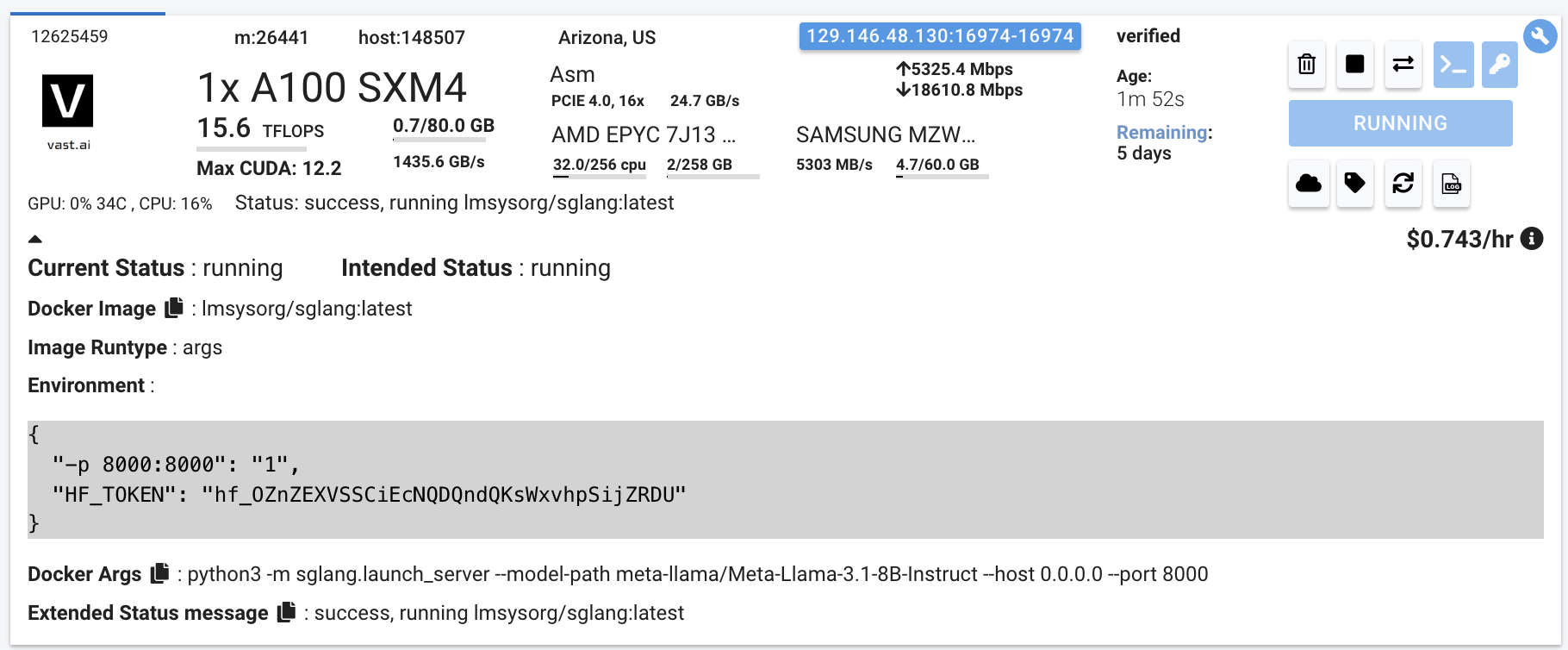

To connect to your instance, we'll first need to get the IP address and port number. Once your instance is done setting up, you should see something like this:

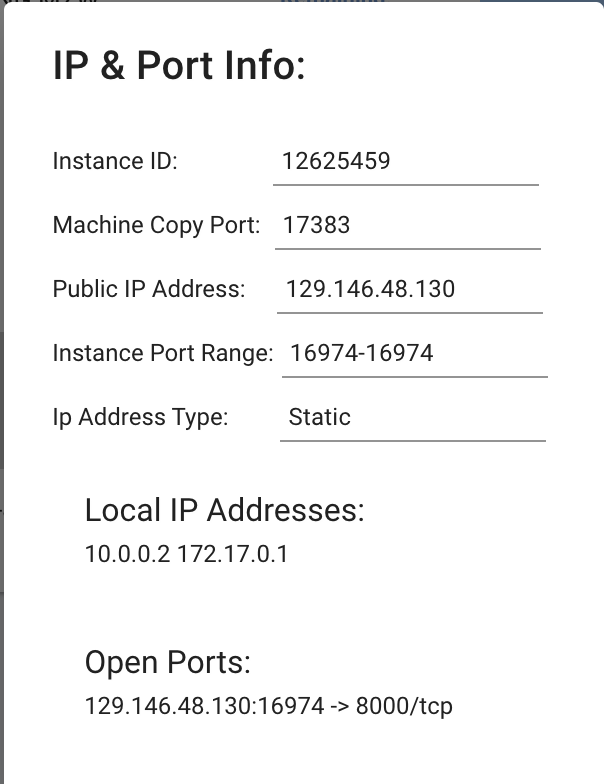

Click on the highlighted button to see the IP address and correct port for our requests.

We will copy the IP address and the port into the command below.

# This request assumes you haven't changed the model. If you did, set the "model" value in the JSON payload below to match.

curl -X POST http://<IP-Address>:<Port>/v1/completions -H "Content-Type: application/json" -d '{"model" : "meta-llama/Meta-Llama-3.1-8B-Instruct", "prompt": "Hello, how are you?", "max_tokens": 50}'

You will see a response from your model in the output. Your model is up and running on Vast!

In the notebook, we include ways to call this model with requests or the OpenAI client.

Things to look out for with other configurations

If you are downloading a model that needs authentication from the Hugging Face Hub, passing -e HF_TOKEN=<Your-Read-Only-Token> within Vast's --env variable string should help.

Sometimes the full context of a model can't be used, given the GPU memory allocated to SGLang and the size of the model. In those cases, you might want to increase --mem-fraction-static or decrease --context-length. Increasing --mem-fraction-static does raise the risk of CUDA out-of-memory errors that can be hard to predict ahead of time.

We won't need either of these for this specific model and GPU configuration.
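
To get a feel for why context length and GPU memory trade off against each other, here is a rough back-of-the-envelope estimate of KV cache size. It is a sketch under stated assumptions: the layer count, KV head count, and head dimension are taken from the published Llama 3.1 8B configuration, the cache is stored in 16-bit precision, and a single sequence fills the whole context. It ignores the model weights, CUDA graphs, and any overhead SGLang adds, so treat the result as a lower bound.

```python
def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Bytes of KV cache one token occupies across all layers.

    The leading 2 accounts for storing both a key and a value vector
    per KV head in every layer.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


def kv_cache_gib(context_tokens, **model_shape):
    """KV cache size in GiB for one sequence of `context_tokens` tokens."""
    return context_tokens * kv_cache_bytes_per_token(**model_shape) / 2**30


# Assumed shape for meta-llama/Meta-Llama-3.1-8B-Instruct (from its config).
LLAMA_3_1_8B = dict(num_layers=32, num_kv_heads=8, head_dim=128)

per_token = kv_cache_bytes_per_token(**LLAMA_3_1_8B)
full_context = kv_cache_gib(131072, **LLAMA_3_1_8B)

print(f"{per_token // 1024} KiB per token")        # 128 KiB per token
print(f"{full_context:.1f} GiB at 131072 tokens")  # 16.0 GiB at 131072 tokens
```

Under these assumptions a full-length sequence needs about 16 GiB of cache on top of the weights, which is why a GPU with more than 40 GB of RAM leaves enough headroom for this model, while larger models or smaller GPUs may need a shorter context.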

Testing

Copy the IP address and port from your instance once it is ready, and then use the following code to call it. Note that while your server might have its ports ready, the model might not have finished downloading yet. You can check the instance logs to see when it has started serving.

import requests

headers = {
    'Content-Type': 'application/json',
}

json_data = {
    'model': 'meta-llama/Meta-Llama-3.1-8B-Instruct',
    'prompt': 'Hello, how are you?',
    'max_tokens': 50,
}

response = requests.post('http://<Instance-IP-Address>:<Port>/v1/completions', headers=headers, json=json_data)

print(response.content)

Or use OpenAI:

pip install openai

from openai import OpenAI

# Modify OpenAI's API key and API base to use SGLang's API server.
openai_api_key = "EMPTY"
openai_api_base = "http://<Instance-IP-Address>:<Port>/v1"

client = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)

completion = client.completions.create(
    model="meta-llama/Meta-Llama-3.1-8B-Instruct",
    prompt="Hello, how are you?",
    max_tokens=50,
)

print("Completion result:", completion)
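
Because the instance can expose its port before the model has finished downloading, it can help to wait for the server before sending real requests. The helper below is a minimal sketch, not part of SGLang or Vast. It assumes the OpenAI-compatible server answers GET /v1/models with HTTP 200 once it is serving, and the names http_probe and wait_until_ready are hypothetical.

```python
import http.client
import time
import urllib.error
import urllib.request


def http_probe(base_url, timeout=5.0):
    """Return True if GET {base_url}/v1/models answers with HTTP 200.

    Assumption: the server only answers this route once it is serving.
    """
    try:
        with urllib.request.urlopen(f"{base_url}/v1/models", timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeouts, and HTTP error codes all mean "not ready".
        return False


def wait_until_ready(base_url, max_wait=900.0, interval=10.0,
                     probe=http_probe, sleep=time.sleep):
    """Poll `probe` until it succeeds or `max_wait` seconds have passed.

    Returns True as soon as the probe succeeds, False on timeout.
    `probe` and `sleep` are injectable so the loop can be tested offline.
    """
    deadline = time.monotonic() + max_wait
    while True:
        if probe(base_url):
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval)
```

For example, wait_until_ready("http://<Instance-IP-Address>:<Port>") blocks for up to 15 minutes and returns True once the server responds, after which the requests or OpenAI calls above should succeed.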

Conclusions

Model inference is expensive, and moving to more affordable compute and models makes a real difference to an engineering team's margins and shipping velocity.

SGLang on Vast is a good fit for this, pairing Vast's access to affordable compute with the simplicity and high throughput of the SGLang backend.

SGLang is a great starting point for building generative AI apps. We will continue to explore using this tool with Vast in future posts.

Llama 3.1 is ready to go on Vast, so you can start experimenting and building!